Hidden Pasts, Digital Futures: Generations from Alida Horse on Vimeo. (See an SFU News story profiling filmmaker Alida Horse, whose video about the Generations exhibit, above, won 1st Place at the 2016 International Conference on Computational Creativity in Paris.)

Generations was part of Hidden Pasts, Digital Futures, a digital art and VR exhibition featuring works by Jeffrey Shaw, Robert Lepage, Stan Douglas and more.

Curated by Kate Hennessy, Gabriela Aceves and Thecla Schiphorst, Generations engages notions of materiality, embodiment, subjectivity, and memory as they are expressed in contemporary generative art practice. The works use computation to endow machines with agency for the autonomous production of outcomes that are generally outside of the control of their makers. As a play on programming iterations, life cycles, artificial intelligence, and creative possibilities, these works generate spaces for affective engagement between machine and information, subject and object, individual and avatar, and signal and noise. Landscapes, bodies, movements, sounds, and materials are transformed by their interactions with code and its generative potential.

The exhibition was open until October 16, 2015 at the SFU Woodwards, Wong Theatre Lobby, and featured: Arne Eigenfeldt, Matt Gingold, Thecla Schiphorst, Philippe Pasquier, Jim Bizzocchi, Steve DiPaola, Miles Thorogood.

Bringing Out the Ghosts

(Code-based computer-generated video)

Bringing Out the Ghosts takes as its starting point perhaps one of the most clichéd forms of the digital age: the social media selfie. Using generative algorithms that iteratively expose their ghost-like ambience, this work pulls away the veil of personality to reveal ghosts within the representational form. It uses one of the most traditional art forms, the portrait, as its source, revealing the algorithmic machine that creates these ghosts through a layered dissolution of form. These ambient generative videos evolve the human mask full circle, situating the ghost and the machine together.

Steve DiPaola is an active artist and scientist who explores concepts of the virtual. Steve creates and designs virtual human, expression and creativity systems both in his research and his artwork. His Artificial Intelligence software systems use code based on models of cognitive and biological processes.

Matthew Gingold, Thecla Schiphorst, Philippe Pasquier

Longing + Forgetting

(Generative video installation)

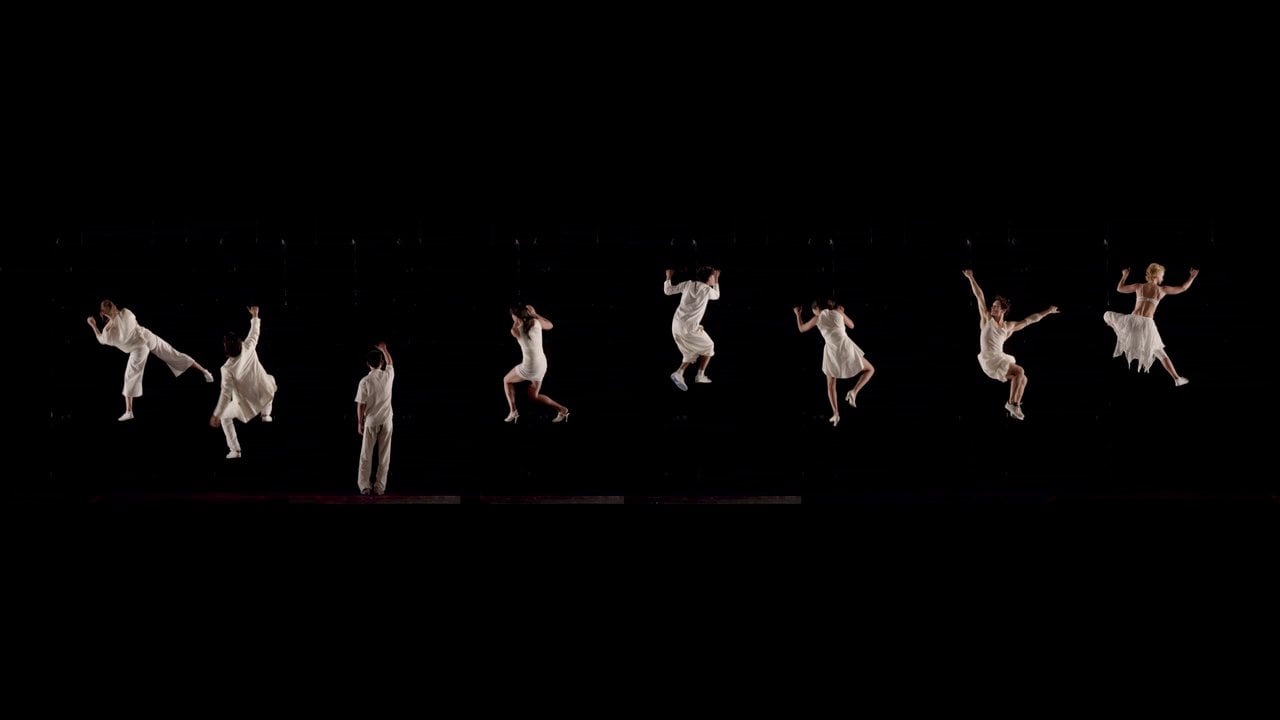

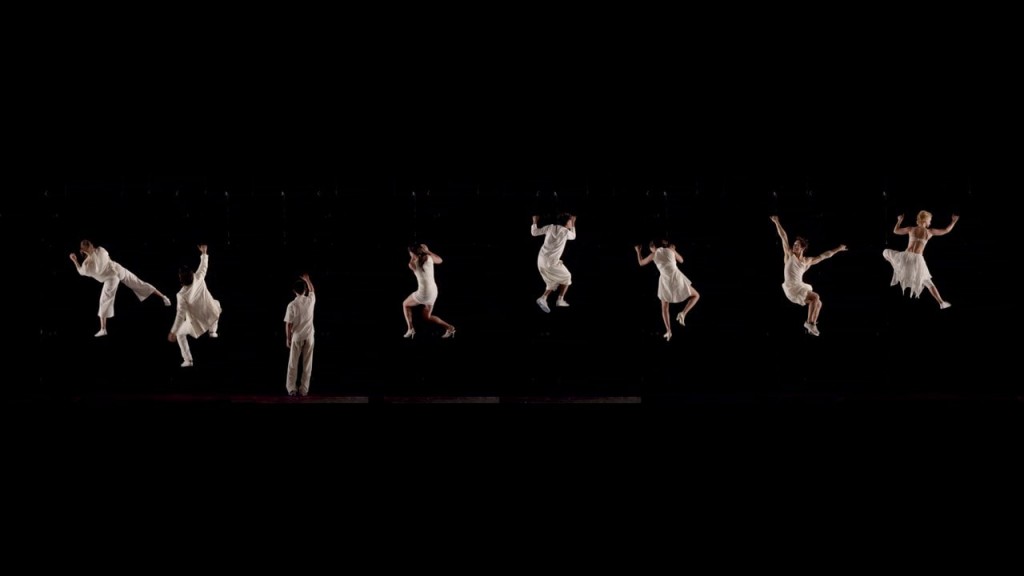

Longing + Forgetting is a generative urban screen video installation that explores movement as a collage of experiences, both physical and personal, provoking chance encounters between performers expressed as video agents. Each performer/agent moves in a solitary path on the facade, as a separate instance within this symbolic realm, exploring precarious movement paths inhabiting landscapes that are both architectural and emotional.

Longing + Forgetting rests upon the notion of video agents, combining strong concepts from agent behaviour and artificial intelligence with a somatically based physical movement language. The video agents’ movement vocabulary is activated within a motion graph; agents live their lives on the facade, inhabiting the windows, doors, ledges or columns available within the architecture, and performing activities such as sleeping, standing, crawling, climbing up walls and into windows, embracing and even falling.
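As a rough illustration of what a motion graph is, the sketch below treats each activity named above as a node and lets an agent step only along permitted transitions. The transition table, the `walk` function and the random choice of next activity are all assumptions made for illustration; the installation's actual graph and agent logic are not described here.

```python
import random

# Hypothetical transition table: which activity may follow which.
# The activity names come from the description above; the edges are invented.
MOTION_GRAPH = {
    "standing": ["crawling", "climbing", "embracing", "sleeping", "falling"],
    "sleeping": ["standing"],
    "crawling": ["standing", "climbing"],
    "climbing": ["standing", "falling"],
    "embracing": ["standing"],
    "falling": ["crawling", "standing"],
}


def walk(start, steps, rng):
    """Return a sequence of activities that follows edges of the graph."""
    path = [start]
    for _ in range(steps):
        # Pick any activity reachable from the current one (assumed: uniform choice).
        path.append(rng.choice(MOTION_GRAPH[path[-1]]))
    return path


if __name__ == "__main__":
    print(walk("standing", 8, random.Random(0)))
```

Restricting each step to the edges of the graph is what keeps a sequence of clips physically plausible: an agent cannot, for instance, go straight from sleeping to climbing in this hypothetical table.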

Matt Gingold has been developing and implementing technology in performance and installation for the last 15 years, spanning theatre, dance, museum and gallery contexts. He is particularly interested in the social and cultural meanings that technology creates, and how these can be harnessed in the production of unique, live(d) experience.

Thecla Schiphorst is a media artist whose background in dance and computing forms the basis of her media arts practice. She is the recipient of the 1998 PetroCanada Award in New Media, awarded to Canadian artists for their contribution to innovation in art and technology.

Philippe Pasquier is both a scientist specialized in creative computing and a multi-disciplinary artist. His contributions range from theoretical research in artificial intelligence, multi-agent systems and machine learning to applied artistic research and practice in digital art, computer music, and generative art.

Jim Bizzocchi, Arne Eigenfeldt, Miles Thorogood

Seasons

(Original computational software, single channel video, stereo sound)

Seasons is an audio-visual experience that models and depicts our natural environment across the span of a year. The work is a real-time cybernetic collaboration among three generative systems: video, soundscape, and music. These systems run continuously to build a seamless media output for a single high-definition screen and multi-channel sound system.

Seasons is part of the SFU Generative Media Project, led by Jim Bizzocchi with co-applicants Arne Eigenfeldt and Philippe Pasquier.

Credits: Film, Jim Bizzocchi; Sound Composition, Arne Eigenfeldt and Miles Thorogood; Team Assistants, Jianyu Fan, Le Fang, Matt Horrigan and Justine Bizzocchi; Video Images, DOP Glen Crawford, Samantha Derochie, Jeremy Mamisao, Julian Giordano, Jenni Rempel, Adrian Bisek and Jim Bizzocchi.

Arne Eigenfeldt is a composer of electroacoustic music whose research investigates generative music systems. His music has been performed around the world, and his collaborations range from Persian Tar masters to contemporary dance companies to musical robots.

Jim Bizzocchi is a filmmaker whose research interests include the aesthetics and design of the moving image, interactive narrative, and the development of computational video sequencing systems. His Ambient Video films and installations have been exhibited widely at festivals, galleries and conferences.

Miles Thorogood is a doctoral researcher exploring relationships between machine learning, artificial intelligence, soundscape, and creativity. His works include sound installation, locative media, and generative audio-visual manifestations shown in Australia, Europe and North America.

Arne Eigenfeldt

Roboterstück / MachineSongs

Roboterstück is an installation for nine agents performing Ajay Kapur’s NotomotoN (an 18-armed robotic percussionist), in which a new composition is generated on command, with each performance usually lasting between 3 and 5 minutes. The agents negotiate a texture from 16 possible combinations – based upon the following features: slow/fast; sparse/dense; loud/soft; rhythmic/arrhythmic. Once a texture is agreed upon, they perform their interpretation of that texture. Agents become bored easily, and may drop out; alternatively, they may enter at any time. Once half of the agents have decided that they are no longer interested in the specific texture, the section stops, and a new texture is negotiated. When the same texture has appeared three times, the performance is complete.
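The control flow described above can be sketched as a small simulation. This is only a toy model under stated assumptions: the plurality vote in `negotiate`, the per-tick `boredom` probability and the function names are invented for illustration, agents re-entering a section is left out, and none of it is taken from the installation's actual software. What does come from the description is the four binary features (giving 2 × 2 × 2 × 2 = 16 textures), the nine agents, the rule that a section stops once half the agents have lost interest, and the rule that the piece ends when one texture has appeared three times.

```python
import itertools
import random
from collections import Counter

# The four binary features named in the description.
FEATURES = [("slow", "fast"), ("sparse", "dense"),
            ("loud", "soft"), ("rhythmic", "arrhythmic")]
TEXTURES = list(itertools.product(*FEATURES))  # 2**4 = 16 combinations


def negotiate(rng, n_agents):
    """Assumed negotiation: each agent proposes a texture, plurality wins."""
    votes = Counter(rng.choice(TEXTURES) for _ in range(n_agents))
    return votes.most_common(1)[0][0]


def play_section(rng, n_agents, boredom):
    """Run one section until at least half of the agents have lost interest."""
    bored = [False] * n_agents
    ticks = 0
    while 2 * sum(bored) < n_agents:
        for i in range(n_agents):
            # Assumed: each interested agent gets bored with fixed probability.
            if not bored[i] and rng.random() < boredom:
                bored[i] = True
        ticks += 1
    return ticks


def perform(seed=0, n_agents=9, boredom=0.2, repeats_to_end=3):
    """Return the list of textures played, in order, for one performance."""
    rng = random.Random(seed)
    seen = Counter()
    sections = []
    while True:
        texture = negotiate(rng, n_agents)
        play_section(rng, n_agents, boredom)
        sections.append(texture)
        seen[texture] += 1
        if seen[texture] == repeats_to_end:
            return sections


if __name__ == "__main__":
    for texture in perform(seed=1):
        print(" / ".join(texture))
```

Because there are only 16 textures, a performance under this stopping rule can never exceed 33 sections: after 32 sections in which every texture has appeared at most twice, the next one must be a third appearance.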

Arne Eigenfeldt is a composer of live electroacoustic music, and a researcher into intelligent generative music systems. His music has been performed around the world, and his collaborations range from Persian Tar masters to contemporary dance companies to musical robots.