Numeros tene, res sequentur.

Stick to numbers and (all) things will follow.

What is the computer?

It’s not a tool because that would imply a context-dependent usefulness or handiness.

It’s not a machine, at least not in a linear sense with easily defined outputs corresponding to inputs. It is not predictable, and it is not independent of previous tasks or information. It is recursive and generative.

Is it a medium? Geoffrey Winthrop-Young (2010) questions it because “it violates, annuls, or supersedes notion of communication, remediation, and intermediality that are presupposed by most conventional definitions of medium”. As the computer has the power to recast or simulate most other media, what is its own mediality? How does one study and define the mediality of the computer, given that it is the Turing machine, the universal machine? It can be anything and everything. Does it have any specific mediality?

Winthrop-Young states that computers, like all other media, are:

“Most powerful when least noticed; and they are least noticed when most empowering.”

Marshall McLuhan (1964) talks about human narcissism and how media that extend our body and mind grow our ego and self-assurance (and arrogance?), because they empower and comfort us without taking credit or being noticed. Does the computer help us live up to our potential, or do we only own this potential thanks to the computer? The question extends naturally to AI: if the computer enables us, all of us, anyone, is the human a key part of the equation or can it be removed? In everyday life, these questions are seldom asked because our computer-fueled potential and performance are comforting and self-inflating. Computational art has a key role here and a rich history of critically dissecting and exposing our relation to computers.

Why is it important? Is there a need to understand computers? Can’t we just use and exploit them?

Well, they have already affected, and will inevitably continue to affect, our individual agency and the structure of our society.

Curiosity Cabinet for the End of the Millennium by Catherine Richards comments on the prevalence of technology and how we are approaching a state where the only way to avoid it is to lock yourself in a Faraday cage. And let’s think about it: how has your day been so far? It probably looked something like the video Error 404 by Claire Dubosc.

Just like in the video, which is compiled from over 200 clips from YouTube and Vimeo, you were probably woken by an alarm clock or phone, which means that software woke you up rather than a natural cycle such as the sun. Your home’s water, heat and electricity are most likely all controlled by large-scale software systems. When you drive to work, software tells you when to stop and you follow its instructions. The same goes for the pedestrian: software tells you where you can walk and where you can’t, and you obey. If you took the SkyTrain you were certainly at the mercy of computers, because humans are unreliable. All the while you carry, and quite possibly interact with, your personal, always-on, always attention-demanding smartphone. This computer knows everything about you: who you are, what you like, what you do, where you do it. It extends what you can do, but does it really make you more powerful? Take away the phone and the empowerment disappears. This is the scenario implied at the end of Dubosc’s video, when the clips are replaced with error messages.

To make more sense of the computer, we try to divide the term into hardware, software and wetware. So how does one define these terms?

Well, there are a lot of definitions of software, such as “instructions for the computer” (Computer Desktop Encyclopedia) and “programs and procedures required to enable a computer to perform a specific task, as opposed to the physical components” (Oxford English Dictionary), to name a few. Commonly it is specified as non-tangible, as instructing the physical part of the computer, and as ‘required’. It doesn’t take much imagination to connect hardware/software with a body/mind analogy: the software is the immaterial quantity that inhabits and animates some physical entity, the hardware. The two are mutually dependent. On their own they don’t have the identities they have as an intertwined, greater system, and they don’t make sense in isolation. What 10101110001101100 or any other stream of ones and zeros means depends on the circuits and components that it is sent through. The circuits on a circuit board exist only to transport and act on the currents put into them; without current, the electrical components are just a carefully arranged collection of metals, plastics, chemicals and semiconductors. Katherine Hayles (1999) puts it perfectly: “Humans are not composed of an autonomous mind animating a reified body; they emerge from a sequence of context specific embodiments in which mental and affective processes on the one hand and material and physiological conditions on the other shape each other.” I argue that the same holds true for the computer.
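The point about the stream of ones and zeros can be illustrated in a few lines. What follows is only a minimal sketch, assuming Python and its standard `struct` module; the function name `interpretations` and the choice of four readings are mine, made up for illustration. It takes the bit string quoted above, pads it to four bytes, and reads the very same bytes as an unsigned integer, a signed integer, a floating-point number and a piece of text.

```python
import struct


def interpretations(raw: bytes) -> dict:
    """Read the same four bytes under four different conventions.

    The bytes never change; only the 'circuit' (here: the decoding rule)
    that they are sent through does.
    """
    return {
        "unsigned integer": struct.unpack(">I", raw)[0],  # big-endian, 32 bits
        "signed integer": struct.unpack(">i", raw)[0],    # same bits, two's complement
        "float": struct.unpack(">f", raw)[0],             # same bits, IEEE 754 single
        "text (Latin-1)": raw.decode("latin-1"),          # same bits, one character per byte
    }


# The bit string from the paragraph above, padded with leading zeros to 4 bytes.
bits = "10101110001101100"
raw = int(bits, 2).to_bytes(4, "big")

for name, value in interpretations(raw).items():
    print(f"{name}: {value!r}")
```

None of the four readings is the “real” one. The number, the fraction and the characters are all equally present in the bits, and which one appears depends entirely on what receives them.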

So who are we in relation to the computer? This is where the term wetware comes in. It usually refers to the human factor as it relates to computing. The term commonly has a negative connotation, as if the human were a source of error and inferior to the reliable hardware and software of the computer. Today there’s no question that wetware, humans, can’t measure up to the performance of computers for rational tasks. We are simply “too slow”, as Stiegler puts it. Even if wetware is inherently inferior, it has qualities of irrationality, creativity and non-linear behavior that are close to impossible for a computer to fully attain. I like Winthrop-Young’s concluding explanation of the term: “Wetware designates human insufficiency but also marks the embattled area that the computer must yet master in order to render humans obsolete”.

Personally I’m very intrigued by the thought that everything in the digital world can be reduced to ones and zeros, and by what the physical or physiological analogy to that might be. To me the digital and the computer’s software instructions are more familiar and graspable than however my brain might function.

Tracing the history of these terms is slightly different from tracing some of the other terms we have treated in the class. Before the age of the computer, software and hardware were simpler and more literal: soft or hard wares, commodities such as textiles or wooden planks. As the computer entered the scene, software was given its modern meaning by John Tukey, who in a 1958 issue of American Mathematical Monthly stated: “Today the ‘software’ comprising the carefully planned interpretive routines, compilers, and other aspects of automative programming are at least as important to the modern electronic calculator as its ‘hardware’ of tubes, transistors, wires, tapes and the like”. As computers evolved and became increasingly pervasive, the term grew more stable and established. What is interesting here is that most people know software only as computer programs or mobile apps. The high-level software is ubiquitous, but the low-level software and instructions are concealed ever deeper down in the hierarchy. Operating systems are still rather apparent and distinct to users, as the basic software level of interaction that allows them to run their programs and apps. But colossal amounts of immensely complex software are required before an operating system can run. This is hidden from view.

The same goes for all the algorithms and software that “runs our world”, which we have touched upon before. It runs our stock market, it runs our traffic, trains, clocks etc. This is also concealed from the public, and most people never stop to think about how the algorithms work and what the implications are. Let’s return to Winthrop-Young’s statement again: “Most powerful when least noticed; and they are least noticed when most empowering.”

Is it so? It empowers us by marginalizing us. We do things with the computer but we can’t do things to the computer. Günther Anders calls us reverse-utopians: “While utopians cannot produce what they imagine, we can no longer imagine what we produce”. Kittler would agree, and he adds a further dimension to the argument. In his 1997 essay There Is No Software he argues that all software instructions can be reduced to voltage differences in the hardware, and that the software paradigm is deliberately marginalizing us. Terms such as user interface and user-friendliness assure us that we are still very much in control of, and the masters of, our own machines, but what is really happening is that we are closed off from it all and about to be left behind.

To Kittler there is no software because all operations are just “signifiers of voltage differences”. So he deconstructs software into hardware, and of course his argument is valid. Arjen Mulder turns it around when he talks about the remediation a computer is capable of, and how it can emulate any medium and hardware there ever was. “Emulation is the translation of hardware into software.” So by translating the physical structure of one piece of hardware, it is possible to run it as software atop another piece of hardware. As such, one can emulate any and all machines on a computer. Extending this line of thought, how is hardware relevant? Everything digital can be reduced to ones and zeros, and everything non-digital can be replaced by a digital representation. Andy Clark (2001) thinks software has evolved into something taking on more aspects of an organism, being generative and emulating physicality, and he introduces a new term, ‘wideware’, to replace it.
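Mulder’s sentence can be made concrete with a toy example. The sketch below is a minimal interpreter for a made-up accumulator machine. The instruction names (LOAD, ADD, JNZ, HALT), the `run` function and the `countdown` program are all hypothetical, invented for illustration, and do not correspond to any real processor. What would be wiring in a physical machine, a register, a program counter, a conditional jump, is here nothing but a few variables and an if-statement.

```python
def run(program, max_steps=1000):
    """Emulate a hypothetical one-register machine in software.

    `program` is a list of (opcode, argument) pairs. The accumulator and
    program counter, which would be physical registers in hardware, are
    plain Python variables here.
    """
    acc = 0    # the machine's single register
    pc = 0     # program counter: index of the next instruction
    steps = 0  # safety limit so a faulty program cannot loop forever

    while pc < len(program) and steps < max_steps:
        op, arg = program[pc]
        steps += 1
        if op == "LOAD":      # put a constant into the register
            acc = arg
        elif op == "ADD":     # add a constant to the register
            acc += arg
        elif op == "JNZ":     # jump to instruction `arg` if register is non-zero
            if acc != 0:
                pc = arg
                continue
        elif op == "HALT":    # stop the machine
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
        pc += 1

    return acc


# A small program for the imaginary machine: count down from 3 to 0.
countdown = [("LOAD", 3), ("ADD", -1), ("JNZ", 1), ("HALT", 0)]
print(run(countdown))
```

The imaginary machine exists only as this function, yet it runs its programs just as a physical one would. Kittler’s counterpoint still holds, of course: the interpreter itself is, further down, voltage differences in whatever hardware executes it.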

And what about the wetware? Are we in control of our computer tools, or are we being controlled and eventually to be left behind? Maybe it’s neither of these power relationships. Just as human hands with opposable thumbs evolved as basic tools were created, those tools were created as the hands grew more versatile. We continue to develop more complex hardware and software, surrounding ourselves with more powerful computers to exteriorize knowledge and tasks. Of course we shape this technology, but this technology also very much shapes how we live our lives, and just how this co-evolution proceeds is anyone’s guess. Mark Shepard has produced a series of product prototypes to playfully comment on ubiquitous computing and how individuals might be able to fight fire with fire, so to speak. He calls it a Sentient City Survival Kit.

To conclude, let’s take a quick look at the prevalence of hardware/software in our modern cities.

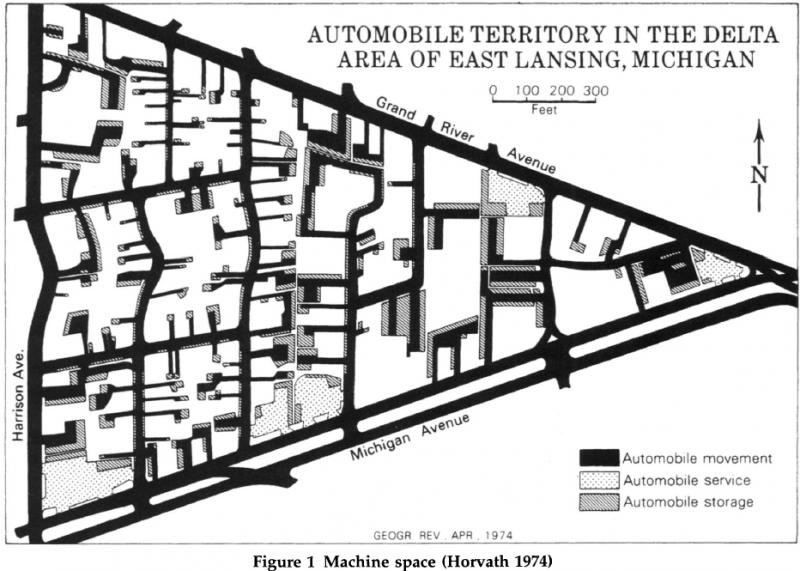

Ron Horvath explored machine space, and true to the time he was mostly focused on automobiles. The problem with doing a similar exploration today is that software usually occupies micro-spaces and that interactions might not be as deliberate and bounded. However, cars remain an interesting object to look at. Software has rendered mechanical diagnostics and repairs outdated and sometimes impossible. A recent consequence of this that comes to mind is the Volkswagen diesel emissions scandal. This brings up the question of ethics in software and the responsibility and liability of its creator. Autonomous cars bring up the same question: who should be responsible in the case of an accident? So far we don’t have answers to these questions. I read in the newspaper the other day that Vancouver will rework its long-term transportation and infrastructure plan to accommodate autonomous cars. The current plan was created only a few years ago and didn’t raise these questions. Lastly, how much software are we surrounded by in our cities? It’s difficult to assess, but the millennium bug (Y2K) did provide some sense of how things stood fifteen years ago.

References

Winthrop-Young, Geoffrey. 2010. Hardware/Software/Wetware in Critical Terms for Media Studies. Chicago: The University of Chicago Press.

McLuhan, Marshall. 1964. Understanding Media: The Extensions of Man.

Hayles, N. Katherine. 1999. How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics. Chicago: University of Chicago Press.

Tukey, John W. 1958. The Teaching of Concrete Mathematics in American Mathematical Monthly 65, no. 1: 1-9.

Anders, Günther. 1981. Die Atomare Drohung: Radikale Überlegungen. Munich: Beck.

Kittler, Friedrich. 1997. Literature, Media, Information Systems. ed. John Johnston. Amsterdam: Overseas Publishers Association.

Mulder, Arjen. 2006. Media in Theory, Culture and Society 23, nos. 1-2: 289-96.

Clark, Andy. 2001. Mindware – An introduction to the philosophy of cognitive science. Oxford: Oxford University Press.

Horvath, Ron. 1974. Machine Space in The Geographical Review LXIV 166-87.

Works

Richards, Catherine. 1995. Curiosity Cabinet for the End of the Millennium.

Dubosc, Claire. 2014. Error 404: File Not Found. Accessed at: https://vimeo.com/69070555. [accessed 2016-02-22].

Shepard, Mark. 2010. Sentient City Survival Kit. Accessed at: https://vimeo.com/44864756. [accessed 2016-02-22].
