Abstract

Fluid Dynamics is a four-channel soundscape composition. The piece uses field recordings from the Vancouver area – predominantly water sounds. These found materials were processed and edited using custom-made software developed in the Max MSP environment, experimenting with three techniques: granular synthesis, modal synthesis and convolution. The creative process of composing the piece unfolded through iterative cycles of listening, transforming, and editing. Importantly, coding has been an intrinsic part of these creative cycles, as algorithms were developed and tuned to disclose qualities and properties already present in the source materials.

Keywords

Soundscape composition, found materials, finding, listening, granular synthesis, modal synthesis.

Source Materials

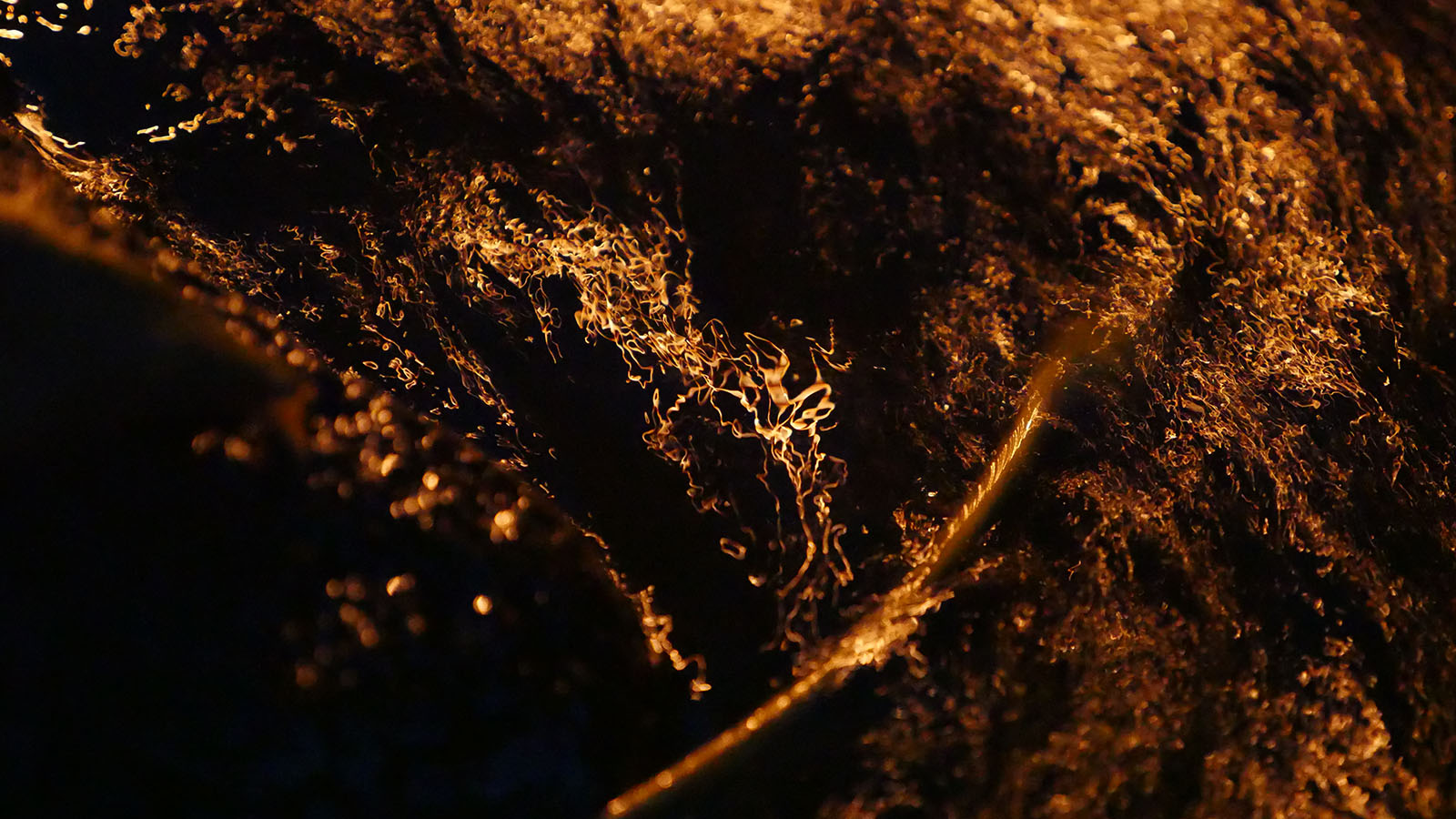

The piece is based mainly on three field recordings from the Vancouver area, each a type of water sound with a characteristic and contrasting aural signature:

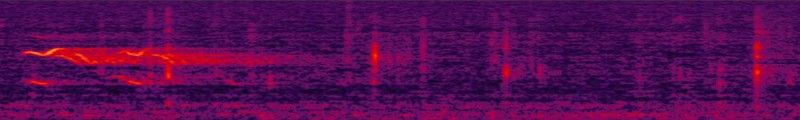

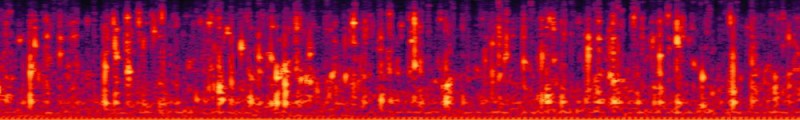

Soft rain sounds, recorded on Burnaby Mountain, with a sparse density of impacts, and a light background ambiance from surrounding leaves and wind. The longer pattern in the spectrogram is a birdsong, showing a typical pitched and melodic structure.

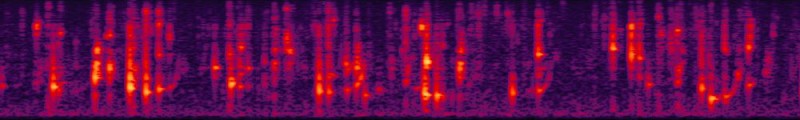

A light trickle, recorded at Wreck Beach. The density and energy of sound impacts are higher than in the rain recording, but each droplet sound can still be distinguished as a discrete event, at least when magnified with a sound editor.

A stronger trickle, recorded at Lighthouse Park, overshadowed by the rumbling propeller of a float plane traversing the sky. The water sounds themselves are more intense, the discrete impacts of droplets no longer separable.

Techniques

A combination of three techniques is used: granular synthesis, modal synthesis and convolution.

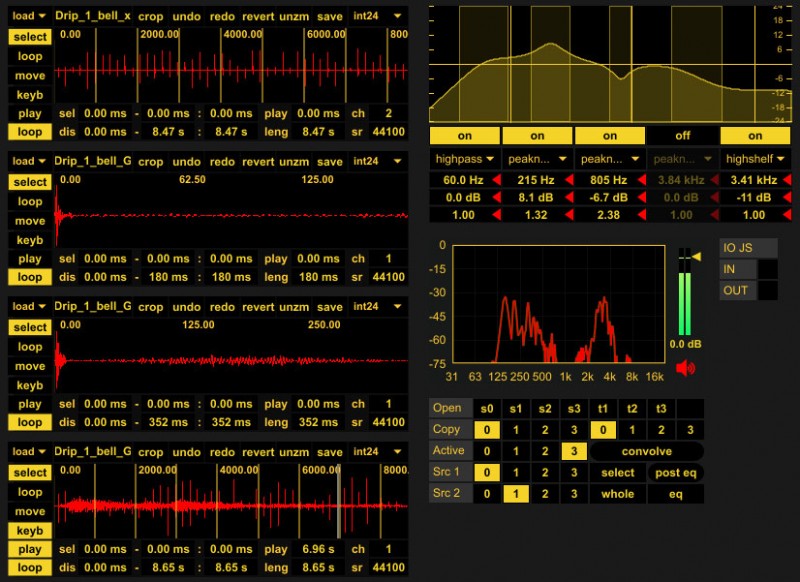

Granular synthesis combines sound grains into dense clouds to compose complex sound masses. The grains are typically short audio segments of less than 100 milliseconds. Their density can range from a few hundred to a few thousand per second.
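
The principle can be illustrated with a minimal sketch. It is written in Python for readability and is not the C code used for the piece; the function names (`hann`, `granulate`), the Hann envelope, the random grain placement and the default values are all assumptions chosen for illustration.

```python
import math
import random


def hann(n):
    """Hann window of n samples, used here as the grain envelope."""
    if n < 2:
        return [1.0] * n
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (n - 1)) for i in range(n)]


def granulate(source, sr, duration_s, grain_ms=50.0, density=200.0, seed=0):
    """Scatter windowed grains taken from `source` over an output buffer.

    source     -- list of float samples (mono)
    sr         -- sample rate in Hz
    duration_s -- length of the output in seconds
    grain_ms   -- grain duration in milliseconds (assumed value)
    density    -- grains per second (assumed value)
    """
    rng = random.Random(seed)
    grain_len = int(sr * grain_ms / 1000.0)
    out_len = int(sr * duration_s)
    if grain_len < 2 or grain_len > len(source) or grain_len > out_len:
        raise ValueError("grain length does not fit the source or the output")

    window = hann(grain_len)
    out = [0.0] * out_len
    n_grains = int(density * duration_s)

    for _ in range(n_grains):
        # Pick a random read position in the source and a random
        # write position in the output, then overlap-add the grain.
        src_start = rng.randrange(0, len(source) - grain_len + 1)
        dst_start = rng.randrange(0, out_len - grain_len + 1)
        for i in range(grain_len):
            out[dst_start + i] += source[src_start + i] * window[i]
    return out
```

Calling `granulate(recording, 44100, 10.0, grain_ms=40.0, density=800.0)` on a list of samples would produce a ten-second cloud of roughly 8000 grains. Raising `density` thickens the texture, while shortening `grain_ms` makes the individual grains more percussive.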

Modal synthesis models a sounding object by analyzing its resonant frequencies. This spectral signature depends mostly on the shape and material properties of the object. The model is then implemented as a bank of resonators, each tuned to a specific frequency.
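
A bank of resonators of this kind can be sketched as a set of two-pole filters running in parallel. The Python code below is an illustration under stated assumptions, not the implementation used for the piece: the function name `resonator_bank`, the `(frequency, decay time, gain)` description of a mode, and the mode values in the usage note are hypothetical.

```python
import math


def resonator_bank(excitation, sr, modes):
    """Filter `excitation` through parallel two-pole resonators.

    excitation -- list of float samples driving the model
    sr         -- sample rate in Hz
    modes      -- list of (freq_hz, decay_s, gain) tuples, one per resonance;
                  decay_s is the time for the mode to fall to 1/e of its level
    """
    out = [0.0] * len(excitation)
    for freq_hz, decay_s, gain in modes:
        r = math.exp(-1.0 / (decay_s * sr))   # pole radius sets the decay
        w = 2.0 * math.pi * freq_hz / sr      # pole angle sets the pitch
        a1 = 2.0 * r * math.cos(w)
        a2 = -r * r
        y1 = 0.0  # previous output sample
        y2 = 0.0  # output sample before that
        for n, x in enumerate(excitation):
            # y[n] = x[n] + 2 r cos(w) y[n-1] - r^2 y[n-2]
            y = x + a1 * y1 + a2 * y2
            out[n] += gain * y
            y2, y1 = y1, y
    return out
```

Exciting the bank with a single impulse, for example `resonator_bank([1.0] + [0.0] * 44099, 44100, [(523.0, 0.4, 1.0), (1380.0, 0.2, 0.5)])`, yields a short struck tone with two decaying partials. Feeding it a recording instead lets each impact in the recording ring the modelled object.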

Convolution is usually used to simulate the reverberant properties of a space. An impulse response is first recorded: a sharp sound like a clap and its pattern of decay as it bounces and eventually dissipates. The impulse response characterizes the acoustic properties of the space, and convolving another sound with it simulates the reverberations which would transform the sound.
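
The operation itself is a sum of delayed and scaled copies of the impulse response, one copy per input sample. The Python sketch below shows the direct form for clarity; the function name `convolve` is an assumption, and practical tools generally rely on FFT-based convolution because the direct form becomes slow with impulse responses lasting several seconds.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution of two lists of float samples.

    Each input sample triggers a copy of the impulse response, scaled by
    the sample value and delayed to the sample position. The output is the
    sum of all copies, so its length is len(signal) + len(ir) - 1.
    """
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        if x == 0.0:
            continue  # silent samples contribute nothing
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out
```

Convolving with a single unit impulse, `convolve(signal, [1.0])`, returns the signal unchanged, which corresponds to a space with no reflections at all. A longer impulse response smears each input sample over time according to the recorded decay pattern.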

Software tools were developed for each technique. The algorithms were coded in C, and then integrated into the Max MSP visual programming environment.